Of the numerous changes to the implements for creating 2D images and 3D models, one of the most radical has been the recent adoption of WIMP interfaces. Ironically, there is good reason to believe that WIMP interaction for 3D modeling is actually inferior to the real-world interfaces (pencils, large sheets of paper, clay, paint palettes) that it supplants. In fact, the principal benefit of WIMP interaction is its straightforward integration with computer 3D-model representations, which offer many advantages, including ease of transformation, archiving, replication, and distribution.

Rather than interpreting user compliance as an affirmation of WIMP interaction, we explore the dichotomy between how easy it is to depict a 3D object with just a pencil and paper and how hard it is to model the same object using a multi-thousand-dollar workstation. Our challenge is to blend the essence of pencil sketching interfaces with the power of computer model representations.

This paper surveys ongoing research in "sketch-like" 3D modeling user interfaces. The objective of this research is to design interfaces that match human ergonomics, exploit pre-learned skills, foster new skills, and support the transition from novice to skilled expert. By these criteria, pencil sketching is an ideal form of interaction, supporting users ranging from children to adults, and from doodlers to artists. Perhaps the best testament to the effectiveness of the pencil-and-paper interface is that very few people even consider it a user interface.

Given the desire for a sketch-like interface, an obvious approach is to apply a 3D model reconstruction algorithm, as surveyed by Wang and Grinstein [14], directly to an image of a real pencil sketch. Although valuable, this approach has two shortcomings. First, scanning a completed drawing inherently forgoes the advantages of computer-based models during the modeling process itself. Second, interpreting an arbitrary drawing as an unambiguous and meaningful 3D model is unsolved in the general case, especially since drawings typically contain only visible-surface information.

A more popular alternative to literally using pencil sketches is to interactively update a 3D model based on 2D line drawing input. Branco's system [4] and Pugh's Viking [12], for example, utilize inferencing engines to continuously maintain a valid 3D model as a user draws input lines. Although these approaches are fundamentally well-grounded, they strongly deviate from the ideal of pencil sketching by requiring frequent interaction with WIMP interface components. In addition, these systems focus primarily on the narrow algorithmic perspective of reconstructing a 3D model from line input and not on the entire user experience of creating a 3D model that includes I/O devices and broad tasks such as model parameterization, and camera and object manipulation.

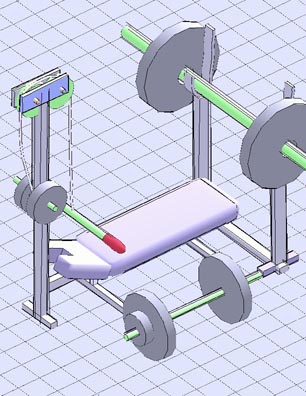

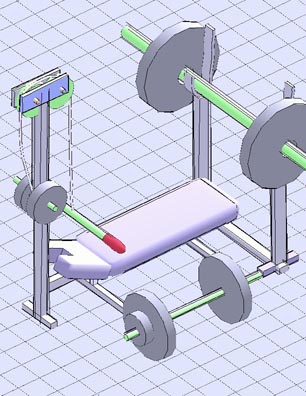

The rest of this paper reports some of the key issues encountered during the development of a number of 3D modeling systems derived from the SKETCH system [16] (see Figure 1). For the purposes of this paper, the term Sketch refers to SKETCH or any of its derivative systems. The following three sections discuss Sketch design issues relating to human-centered I/O, precision, and scalability.

An effective interface for creating 3D models must address a range of human-centered design issues covering both ergonomic and perceptual factors. Sketch interfaces have mainly focused on three interface concepts: emphasizing 2D interaction, co-locating interaction and display, and interacting with idiomatic gestures.

Although it might appear that a 3D or 6D input device would naturally be superior to a 2D input device for the task of 3D modeling, this assumption is difficult to substantiate. In general, because 3D and 6D input devices lack a haptic support surface, it is difficult for users to work from a convenient resting position or to exploit their fine-motor control skills. The use of haptic 6D input devices for 3D modeling, such as SensAble Technologies' PHANTOM, is an interesting but unproven research direction that we are exploring.

Thus, Sketch interfaces are fundamentally based on exploiting the intuition people have for drawing 2D renderings of 3D models using stylus (or mouse) input devices. Stylus devices have the advantage of being both practical and ideally suited to drawing operations. Sketch interfaces additionally require that at least two buttons be mounted along the stylus shaft to facilitate mode switching. We use a Wacom stylus that also has an eraser on its end, which can be distinguished as a third button.

However, some intrinsically 3D operations, such as viewing an object, are far more easily performed and comprehended when using 6D input devices. The ErgoSketch [6] system was therefore designed to optimize the use of interaction devices by providing seamless transitions between 2D stylus and stereoscopic 6D interaction. ErgoSketch users create detailed 3D geometry by drawing with a 2D stylus on a large rear-projected display screen, and then automatically transition to a stereoscopic view by picking up a 6D tracker from the desktop. The 6D tracker is a physical proxy that enables users to "hold" 3D geometry in their hand for better viewing. While holding 3D geometry, users can also enter 3D annotations by drawing with a second 6D tracker attached to a pen.

Although people begin at a very early age to interact with objects directly on a flat surface such as a floor or tabletop, the typical computer setup separates the interaction surface from the display surface and sets them at 90 degrees to each other. For some tasks, this arrangement is acceptable and perhaps preferable; however, for accurate spatial motor-control operations, there is no evidence to indicate anything but a performance disadvantage for displaced I/O surfaces. In an effort to imitate two of the form factors commonly used by artists, Sketch was designed to run either on a notebook-sized, 800x600-pixel Wacom PL-300 LCD display tablet or on a 1280x1024-pixel, drafting-table-sized, rear-projected ActiveDesk, manufactured by ITI of Toronto [7]. Both of these displays support 2D stylus input devices that closely approximate conventional pens.

By co-locating I/O surfaces, Sketch better leverages pre-existing drawing skills and eye-hand coordination. Additionally, co-located I/O surfaces naturally support a range of important bimanual interactions that simplify operations ranging from 3D camera control to drawing gesture lines [15]. Although we have not conducted our own comparative studies, Guiard demonstrated the importance of similar bimanual interaction in conventional handwriting tasks, where subjects performed better when they used their second hand to reorient the paper as they wrote on it [8].

In practice, interfaces using co-located I/O must overcome the added difficulty of interference. For example, marking menus [10] are a popular gestural interaction technique that suffers under co-located input and output, because the user's hand can obscure the radial menu items that appear under the cursor, or two hands can interfere with each other if they both access the same part of the display. A second complication of co-located I/O is that the cursor, which is essential for non-co-located interaction, can be very distracting during co-located interaction, especially when there is noticeable lag between the motion of the pen and that of the cursor. To alleviate these issues, interaction techniques in Sketch are modified so that no critical information is presented in the quadrant under the pen that is likely to be obscured by the user's hand, and no cursor is displayed for the dominant hand, since the tip of the physical pen provides sufficient feedback if calibration is good.
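The quadrant-avoidance rule can be illustrated with a small layout function. This is a minimal sketch, not Sketch's code: the function name `menu_item_positions`, the 60-pixel radius, and the assumption that a right-handed user's hand covers the below-right quadrant of the pen tip (below-left for a left-handed user) are all hypothetical. Items are spread evenly over the remaining 270 degrees.

```python
import math

def menu_item_positions(cx, cy, n, radius=60.0, right_handed=True):
    """Place n radial-menu items around the pen tip (cx, cy), skipping the
    screen quadrant the drawing hand is assumed to cover.

    Screen coordinates: x grows to the right and y grows downward, so
    atan2-style angles between 0 and 90 degrees point below-right of the pen.
    """
    # Assumption: right hand covers 0..90 deg, left hand covers 90..180 deg.
    occluded_start = 0.0 if right_handed else 90.0
    arc_start = occluded_start + 90.0  # first angle outside the hand quadrant
    arc_span = 270.0                   # the three visible quadrants
    positions = []
    for i in range(n):
        # Offset by half a slot so no item sits exactly on a quadrant border.
        deg = (arc_start + arc_span * (i + 0.5) / n) % 360.0
        rad = math.radians(deg)
        positions.append((cx + radius * math.cos(rad), cy + radius * math.sin(rad)))
    return positions
```

A real layout would also have to account for the non-dominant hand, which this sketch ignores.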

Although WIMP interfaces have been remarkably effective for many tasks and users, at the same time they are limiting for many 3D modeling operations. Users of WIMP interfaces consciously search through a GUI of menus and buttons to find operations, whereas pencil users subconsciously rely upon motor and perceptual skills to perform tasks. Thus, by utilizing gestural interaction, Sketch's interface literally "disappears" enabling users to focus upon their modeling task through an unobscured display of the 3D model.

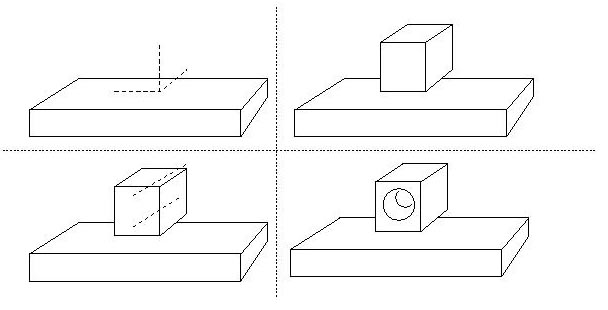

Moreover, by deriving Sketch gestures from familiar sketching idioms, Sketch leverages the intuition and experience people already have for creating and interpreting pencil drawings. For example, in addition to using direct-manipulation techniques for translating an object in a 3D scene, Sketch provides a "shadow" gesture that adjusts the depth of an object to match a hand-drawn shadow. Sketch also uses gestures that approximate partial line drawings of the salient features of 3D primitives to instantiate objects (create, scale, orient, and position them) in a 3D environment (see Figure 2). In contrast, the SketchingMetal system [3] uses a different gesture set, more appropriate for mechanical CAD, that is tuned to conventional compass-and-straightedge drafting idioms.

Figure 2: Clockwise from top left: a cube is instantiated by drawing three perpendicular lines (shown dashed) meeting at a point. The new cube is automatically grouped to the cube it was drawn on. A cylinder is then created by drawing two parallel lines. Since the lines are drawn into the cube, the cylinder is subtracted from the cube.

To simplify the recognition of line-drawing gestures, Sketch requires users to learn a multi-stroke gesture set. This gesture set maps short, possibly ordered sequences of line segments to 3D modeling operations. Since each stroke of a multi-stroke gesture is delimited by a pen-down/pen-up pair, Sketch avoids the image segmentation problem encountered in most line-drawing interpretation algorithms. However, the practical cost of a structured gestural interface is that Sketch users must, at a minimum, memorize the gesture for every operation and, to be efficient, must develop enough skill that most gestures are subconsciously encoded in muscle memory. Based on observations of Sketch users and, independently, of users of the Palm Pilot's Graffiti gesture set, we believe that people can develop sufficient skill to be productive users of gestural interfaces. Still, different users acquire skills at different rates, and more effort should go into refining techniques, such as those presented by Kurtenbach and Buxton [10], that help users acquire gesture skills.
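Because pen-down and pen-up events delimit each stroke, splitting raw input into strokes requires no image analysis at all. A minimal sketch, assuming a hypothetical `PenEvent` record with `"down"`, `"move"`, and `"up"` kinds (not an actual Sketch or Wacom API); motion while the pen hovers between strokes is simply ignored.

```python
from dataclasses import dataclass

@dataclass
class PenEvent:
    kind: str   # hypothetical event kinds: "down", "move", or "up"
    x: float
    y: float

def split_strokes(events):
    """Group a raw event stream into strokes, one per pen-down/pen-up pair."""
    strokes = []
    current = None  # points of the stroke in progress, or None while hovering
    for ev in events:
        if ev.kind == "down":
            current = [(ev.x, ev.y)]
        elif ev.kind == "move" and current is not None:
            current.append((ev.x, ev.y))
        elif ev.kind == "up" and current is not None:
            current.append((ev.x, ev.y))
            strokes.append(current)
            current = None
        # "move" events while the pen is up (hovering) fall through unused.
    return strokes
```

The resulting list of strokes is what a multi-stroke gesture recognizer would then classify and match against the gesture set.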

A common early observation about the SKETCH system was that it was difficult to make precise models, despite the snapping controls that SKETCH did provide for improving accuracy. This is not surprising, given that the intention of Sketch was to support rapid, approximate 3D modeling. However, to confront the issue of precision, SketchingMetal was designed specifically for creating precise, machinable CAD models. Together, these two systems illustrate four techniques for improving 3D modeling precision: task-specific drawing, structured drawing, constraint inferencing and editing, and non-gestural input.

Since drawing tasks vary so widely (compare drafting an engineering sketch of a mechanical part with blocking out an architectural space), it is unreasonable to expect that a single general interface would be optimal for all of them. Instead, by identifying pre-existing idioms specific to particular styles of drawing, powerful interfaces can be designed that are optimized for relatively narrow tasks. The notion of task-specific interfaces, although at odds with conventional computer user interface design, has a strong foundation in real-world interfaces where, for example, the tools and techniques of draftsmen are quite different from those of painters.

To simplify the chore of implementing specialized Sketch interfaces, Sketch uses a YACC grammar to specify the mapping between input strokes and modeling operations. Making a different gesture set for a different application is potentially as simple as writing a new grammar file. In addition, Sketch wraps up grammars into classes so that subclasses can reimplement operations, such as how and which constraints are inferred when an object is instantiated.
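Sketch's actual grammar files are not reproduced here. As a hypothetical stand-in, the sketch below uses a Python dictionary rather than a YACC grammar to map sequences of already-classified stroke tokens (the token names are invented for illustration) to modeling operations, and shows how a subclass can change which constraints are inferred when an object is instantiated.

```python
class GestureGrammar:
    """Hypothetical stand-in for a Sketch grammar: maps a sequence of
    classified stroke tokens to a modeling operation."""

    # Token sequence -> name of the method that performs the operation.
    RULES = {
        ("axis_line", "axis_line", "axis_line"): "make_cube",
        ("parallel_line", "parallel_line"): "make_cylinder",
    }

    def parse(self, tokens):
        method = self.RULES.get(tuple(tokens))
        return getattr(self, method)() if method else None

    def infer_constraints(self, shape):
        # Default inference: group the new object with the surface it was drawn on.
        return [f"group {shape} with surface"]

    def make_cube(self):
        return ("cube", self.infer_constraints("cube"))

    def make_cylinder(self):
        return ("cylinder", self.infer_constraints("cylinder"))


class DraftingGrammar(GestureGrammar):
    """A task-specific subclass: same gestures, one extra inferred constraint."""

    def infer_constraints(self, shape):
        return super().infer_constraints(shape) + [f"snap {shape} to grid"]
```

A different application would supply a different `RULES` table, the analogue of writing a new grammar file. A real grammar would also handle stroke ordering, optional strokes, and geometric predicates that a plain dictionary lookup cannot express.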

4.0.2 Structured Drawing

Since most of the primitives in the SKETCH system consist of straight-line features, SKETCH by default constrains all user input to be parallel to one of the three principal axes of the object over which the lines are drawn. In addition, as lines are drawn, they can be snapped to vertices and edges of existing geometry. Further precision could be gained by incorporating additional snapping techniques, such as Bier's snap-dragging [1].
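Axis-constrained drawing can be sketched as choosing the principal axis whose screen-space projection is most nearly parallel to the stroke, then projecting the stroke onto that axis. This is a minimal illustration, not SKETCH's implementation; it assumes the three axes have already been projected to 2D screen directions, and the function name `constrain_to_axis` is hypothetical.

```python
import math

def constrain_to_axis(start, end, projected_axes):
    """Snap a 2D stroke to the screen-space projection of the principal
    axis it most nearly parallels.

    projected_axes maps an axis name to its 2D screen-space direction.
    Returns (axis_name, snapped_end).
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    best = None  # (score, name, unit_direction)
    for name, (ax, ay) in projected_axes.items():
        norm = math.hypot(ax, ay)
        if norm == 0.0:
            continue  # axis points straight at the viewer; skip it
        ux, uy = ax / norm, ay / norm
        # |stroke . axis| is proportional to |cos(angle)| because the stroke
        # length is the same for every axis; the sign of the axis is irrelevant.
        score = abs(dx * ux + dy * uy)
        if best is None or score > best[0]:
            best = (score, name, (ux, uy))
    if best is None:
        raise ValueError("no drawable axis")
    _, name, (ux, uy) = best
    t = dx * ux + dy * uy  # signed stroke length along the chosen axis
    return name, (start[0] + t * ux, start[1] + t * uy)
```

The snapped endpoint would then be lifted back into 3D along the chosen axis, a step not shown here.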

To draw lines that are not aligned with a principal axis or other constraint guide, the user can "tear" the line from the guide with a quick back-and-forth motion. However, a gesture is an inappropriate way to enter free-hand line mode, since the gesture would interfere with the free-hand line itself; thus, an explicit mode-switching operation is necessary. The alternatives used in Sketch to indicate free-hand line drawing are to press a modifier key on the keyboard or, when two-handed input is available, to rotate or lift the puck held in the non-drawing hand from the tablet surface.
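A minimal sketch of recognizing a "tear", assuming it can be detected as repeated direction reversals in the pen's offset from the guide within a short time window. The thresholds (two reversals, 5 pixels per leg, 0.3 seconds) and the function name `is_tear` are hypothetical tuning choices, not values taken from Sketch.

```python
def is_tear(samples, min_reversals=2, max_duration=0.3, min_travel=5.0):
    """Hypothetical 'tear' detector for lifting a line off its guide.

    samples: list of (time_s, offset_px), where offset_px is the pen's signed
    distance from the constraint guide. A tear is taken to be at least
    `min_reversals` direction changes, each leg covering at least
    `min_travel` pixels, all within `max_duration` seconds.
    """
    if len(samples) < 2 or samples[-1][0] - samples[0][0] > max_duration:
        return False
    reversals = 0
    direction = 0            # +1 / -1 once the first leg is established
    anchor = samples[0][1]   # extreme offset reached on the current leg
    for _, offset in samples[1:]:
        delta = offset - anchor
        if direction != 0 and delta * direction > 0:
            anchor = offset  # still moving the same way: extend the leg
            continue
        if abs(delta) >= min_travel:
            if direction != 0:
                reversals += 1  # moved far enough back the other way
            direction = 1 if delta > 0 else -1
            anchor = offset
    return reversals >= min_reversals
```

Requiring a minimum travel per leg keeps ordinary hand jitter from being mistaken for a tear.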

Unlike structured drawing, constraint inferencing attempts to clean up user input after the user has finished drawing a gesture. In SKETCH, for example, the height of an object is re-inferred when a shadow is drawn for it. In SketchingMetal, numerous constraints, such as a circle being tangent to a line or to one or more other circles, are inferred from a small set of primitives: points, lines, and circles. Additional constraints, such as grouping newly created objects to the surface they are drawn on, are common to both systems. The difficulty with all automatic constraint inferencing mechanisms, however, is that they often infer a wrong constraint or fail to infer an appropriate one. In Sketch, we have experimented with two mechanisms for improving the effectiveness of constraint inferencing: supporting multiple constraint interpretations, and making editing easy.
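SketchingMetal's actual inference rules are not given here. The following minimal sketch shows how tangency might be inferred, assuming a hypothetical tolerance `tol` in drawing units and using the standard point-to-line distance. The line test also "cleans up" the input by returning the radius that makes the tangency exact.

```python
import math

def snap_line_tangent_radius(p, q, center, radius, tol=2.0):
    """If a sketched circle is within `tol` of tangency to the infinite line
    through p and q, return the radius that makes it exactly tangent;
    otherwise return None (no constraint inferred)."""
    (x1, y1), (x2, y2), (cx, cy) = p, q, center
    length = math.hypot(x2 - x1, y2 - y1)
    # Perpendicular distance from the circle's center to the line.
    dist = abs((x2 - x1) * (y1 - cy) - (x1 - cx) * (y2 - y1)) / length
    return dist if abs(dist - radius) <= tol else None


def circle_tangency(c1, r1, c2, r2, tol=2.0):
    """Classify two sketched circles as 'external' or 'internal' tangents
    when their center distance is within `tol` of a tangent distance."""
    d = math.dist(c1, c2)
    if abs(d - (r1 + r2)) <= tol:
        return "external"
    if abs(d - abs(r1 - r2)) <= tol:
        return "internal"
    return None
```

A fixed tolerance is the simplest choice; it is also exactly where wrong or missed inferences come from, which motivates the editing mechanisms discussed next.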

When an object is drawn in Sketch with 2D input, its placement in 3D is not well defined. Sketch has heuristics for determining placement, but often more than one possibility is reasonable. In these situations, Sketch places the object sequentially at each possible depth in the scene, leaving it at the closest reasonable location. If this is not the intended location, the user executes an UNDO operation (gesturally or with the keyboard) to move the object back to the previous possible location. As long as the number of potential depth placements is small (fewer than five), this technique works well.
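One way to model this placement-then-UNDO behavior is as an undo stack filled from the farthest to the nearest candidate depth, so that the nearest reasonable placement is current and each UNDO reveals the previous one. A minimal sketch; the class name `PlacementHistory`, the use of camera distance as "depth", and the assumption that undoing the last placement undoes the creation itself are all hypothetical.

```python
class PlacementHistory:
    """Hypothetical model of depth placement with UNDO."""

    def __init__(self, candidate_depths):
        # Apply placements from farthest to nearest, so the nearest
        # reasonable depth ends up on top of the stack and is shown first.
        self.stack = sorted(candidate_depths, reverse=True)

    @property
    def depth(self):
        """Current depth, or None once every placement has been undone."""
        return self.stack[-1] if self.stack else None

    def undo(self):
        # Discard the current placement, revealing the previous (farther) one.
        if self.stack:
            self.stack.pop()
        return self.depth
```

Because each UNDO costs one user action, this approach stays pleasant only while the candidate list is short, consistent with the limit noted above.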

To simplify editing operations, Sketch extends multi-stroke gestures to work in combination with direct-manipulation interactions. To distinguish between drawing strokes and directly manipulating objects, Sketch maps drawing to one stylus button and direct manipulation to a second, so that manipulation can be performed without an explicit gesture. However, since 2D stylus input can be mapped to 3D object manipulation in several different ways, Sketch uses multi-stroke gestures to augment direct manipulation. Before manipulating an object, the user can draw strokes that explicitly specify either motion constraints or interaction modes. The strokes used for motion constraints are geometrically meaningful (for example, drawing a line indicates a specific linear translation path), whereas the strokes used to indicate an interaction mode (e.g., the letter 'S' for snapping mode) are abstract.
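When a drawn line constrains a subsequent drag, the drag can be projected onto the line's direction. A minimal sketch, assuming the constraint line and the drag vector have already been mapped into the same coordinate space (the 2D-to-3D mapping is not shown); `constrain_drag_to_line` is a hypothetical name.

```python
def constrain_drag_to_line(line_start, line_end, drag):
    """Project a drag vector onto the direction of a drawn constraint line,
    so the object can move only along that line. Works in 2D or 3D."""
    direction = [b - a for a, b in zip(line_start, line_end)]
    len_sq = sum(c * c for c in direction)
    if len_sq == 0.0:
        return tuple(0.0 for _ in direction)  # degenerate line: no motion
    t = sum(d * c for d, c in zip(drag, direction)) / len_sq
    return tuple(t * c for c in direction)
```

Any drag component perpendicular to the line is discarded, so the object slides along the path the user drew.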

The final technique for improving the precision of sketching tools is to incorporate non-gestural elements such as numerical entry from a keypad, pop-up widgets, and both handwriting and speech recognition. The SketchingMetal system supports exact dimensioning of shapes through numerical features. When primitives are created or in response to user gestures, numerical text features are displayed for geometric dimensions. These text features can be linked to other text features using an extended drag-and-drop interface that also allows simple spreadsheet-like formulas to be established. In addition, voice input, hand-writing recognition, or keypad entry can be used to enter specific values for any feature.
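The details of SketchingMetal's feature linking are not specified here. The following minimal sketch assumes features are named numbers and that each link is a hypothetical `(function, inputs)` pair, evaluated in dependency order with a cycle check, much as a spreadsheet recalculates.

```python
def evaluate_features(values, formulas):
    """values: feature name -> number entered directly (voice, keypad, ...).
    formulas: feature name -> (function, [input feature names]).
    Returns every feature's value, resolving links recursively."""
    resolved = dict(values)
    visiting = set()  # features currently being resolved, for cycle detection

    def resolve(name):
        if name in resolved:
            return resolved[name]
        if name in visiting:
            raise ValueError(f"cyclic link involving {name!r}")
        visiting.add(name)
        fn, inputs = formulas[name]
        resolved[name] = fn(*(resolve(i) for i in inputs))
        visiting.discard(name)
        return resolved[name]

    for name in formulas:
        resolve(name)
    return resolved
```

Directly entered values take precedence over formulas for the same feature, and a cyclic link is reported rather than silently looping.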

Extending the functionality of a WIMP interface is rather straightforward: just add a button or menu item and the user will find it. Gestural interfaces, however, do not scale as easily. Gestures cannot be added without limit because of the time it takes to learn each new gesture, the effort required to recall large gesture sets, and the strong influence that the details of individual gestures, and the similarity between gestures, have on user performance and system robustness. For example, an early version of Sketch provided a gesture for deleting objects that involved "flicking" the object away with a quick stylus motion. Although this interaction seemed reasonable in the early design, as the gesture set grew more complex and the modeling system gained functionality, we found that flicking was too imprecise and conflicted with other interactions, especially the fast movements of skilled users. Through multiple iterations of the Sketch interface, we have identified a range of techniques for extending system functionality, including physical buttons, mnemonics, context-sensitive gestures, widgets, and two-handed interaction.

5.0.1 Buttons

One of the simplest methods for extending a gestural interface is to map new gestures to distinct physical buttons. The Sketch system makes use of a stylus that effectively has four buttons. The tip of the stylus is one button and is used for all drawing operations. The lower button of a two-button rocker on the shaft of the stylus is used for manipulating (translating, rotating, scaling) objects, and the upper button of the rocker is used for camera manipulation. The eraser of the pen is used to invoke abstract editing gestures, such as undo and redo.

Although mapping functionality to physical buttons is very simple and allows rapid mode transitions, it offers only limited scalability. Even the two buttons along the stylus shaft are difficult for some people to press because of the way they grip the stylus. Adding more buttons to the desktop is a possibility, but it makes the interface less physically mobile and increases the coordination required of users.

5.0.2 Mnemonics

As abstract operations are added to the system, it can be more difficult to remember the gesture that invokes them. The importance of establishing strong mnemonic relationships between gestures and operations is inversely proportional to the frequency of the gesture's use. As a rule, we have found it sufficient to map single-stroke gestures, recognized by Rubine's system [13], that resemble alphabetic characters to operations that begin with the same letter. For example, a color wheel is invoked through a gesture like the letter 'C'. However, for more frequently used operations, we prefer simpler gestures: undo and redo functions are invoked by gesturing away from or toward the body with the eraser of the stylus. As our system complexity has increased, we have also begun to map entire words, recognized with Paragraph International's handwriting recognizer, to our least frequently used operations.
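The eraser-based undo and redo gestures reduce to classifying the direction of a stroke. A minimal sketch, assuming tablet coordinates in which y grows toward the user, a hypothetical 20-unit minimum stroke length, and, since the text does not say which direction is which, the assumption that moving away from the body means undo.

```python
def classify_eraser_stroke(points, min_length=20.0):
    """Hypothetical classifier for eraser strokes.

    Assumes tablet y grows toward the user and ASSUMES that a stroke away
    from the body is 'undo' and toward the body is 'redo'.
    Returns 'undo', 'redo', or None for an unrecognized stroke.
    """
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    if abs(dy) < min_length or abs(dy) < abs(dx):
        return None  # too short, or mostly sideways
    return "undo" if dy < 0 else "redo"
```

Only the net displacement is used, which keeps these frequent gestures simple, in line with the preference for simple gestures for frequently used operations.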

5.0.3 Context-Sensitivity

To further scale our gesture sets, Sketch activates different gesture sets depending on the context. Although a modeling system might have hundreds of operations, most of them do not apply in every 3D modeling context. For example, SketchingMetal has two distinct gesture sets, one for floor-plan operations and one for 3D operations. The active gesture set is selected based on whether the user is viewing the model from directly above (floor plan) or from an angle (3D). More subtle modifications can be made to gesture sets depending on the object the cursor is over. Thus, gestures drawn on top of a floor might be interpreted differently from gestures drawn on a wall.
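Context-sensitive selection can be sketched in two steps: the camera's view direction picks the gesture set, and the surface under the cursor refines the interpretation. A minimal sketch; the 10-degree "directly above" threshold, the z-up convention, and all operation names in `INTERPRETATIONS` are hypothetical.

```python
import math

def active_gesture_set(view_dir, up=(0.0, 0.0, 1.0), plan_threshold_deg=10.0):
    """Return 'floor_plan' when the camera looks (nearly) straight down,
    otherwise '3d'. `up` is assumed to be a unit vector."""
    norm = math.sqrt(sum(c * c for c in view_dir))
    # Cosine of the angle between the view direction and straight down (-up).
    cos_down = -sum(v * u for v, u in zip(view_dir, up)) / norm
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_down))))
    return "floor_plan" if angle <= plan_threshold_deg else "3d"

# Hypothetical table: (gesture set, surface under cursor, stroke) -> operation.
INTERPRETATIONS = {
    ("floor_plan", "any", "line"): "add_wall",
    ("3d", "floor", "line"): "place_on_floor",
    ("3d", "wall", "line"): "cut_opening",
}

def interpret(stroke, view_dir, surface):
    gesture_set = active_gesture_set(view_dir)
    # A surface-specific entry refines the set-wide default, if one exists.
    return (INTERPRETATIONS.get((gesture_set, surface, stroke))
            or INTERPRETATIONS.get((gesture_set, "any", stroke)))
```

Keeping each context's table small is the point: the user only has to distinguish among the gestures that are active in the current context.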

5.0.4 Widgets

Although gestures and linguistic mnemonics can naturally express many concepts, there are still operations that are best expressed through a more conventional, search-oriented interface. For example, color selection can be done with words or gestures to a limited extent, but in general it requires a visual representation of a color space that the user can search through. Sketch uses the concepts of 2D and 3D widgets [5], and Toolglasses and Magic Lenses [2], to support operations ranging from color and texture selection, to viewing deleted objects, to saving and restoring camera locations.

5.0.5 Two-Hands

The final approach to extending the functionality of Sketch is based upon two-handed interaction. Not only can more gestures be distributed between both hands, but gestures can also be made simpler and more powerful. For example, with two-handed interaction, objects can be intuitively ungrouped by pinning one object with one hand while manipulating the grouped object with the other. Moreover, many complex operations, especially 3D camera and object control, are simplified because interaction does not have to be artificially decomposed into sequences of one-handed operations. An object can, for instance, be simultaneously translated and rotated by grabbing two points on it, one with each hand, and then moving each hand independently. Other familiar real-world interactions can be exploited to simplify 3D camera control [15] and even to improve menu selection, where a menu (or tool palette) attached to the non-dominant hand can be brought to the dominant hand for selection without the user losing visual focus [11]. To alleviate the problem of interference between hands, Sketch offsets objects held in the non-dominant hand so that both hands can logically occupy the same point on the display.
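Simultaneous two-handed translation and rotation can be sketched in 2D as a rigid transform computed from the two grab points and the two current hand positions. This is a minimal illustration, not Sketch's method: it assumes no scaling, so the rotation follows the direction between the hands and the midpoint of the grab points follows the midpoint of the hands. The function names are hypothetical.

```python
import math

def two_point_transform(p1, p2, q1, q2):
    """Rotation angle and translation that carry grab points p1, p2 toward
    current hand positions q1, q2 (2D, rigid, no scaling)."""
    angle = (math.atan2(q2[1] - q1[1], q2[0] - q1[0])
             - math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    c, s = math.cos(angle), math.sin(angle)
    pm = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2)  # midpoint of grab points
    qm = ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2)  # midpoint of hands
    # Rotate the grab midpoint, then translate it onto the hand midpoint.
    rx, ry = c * pm[0] - s * pm[1], s * pm[0] + c * pm[1]
    return angle, (qm[0] - rx, qm[1] - ry)

def apply_transform(angle, t, point):
    """Rotate `point` about the origin by `angle`, then translate by `t`."""
    c, s = math.cos(angle), math.sin(angle)
    x, y = point
    return (c * x - s * y + t[0], s * x + c * y + t[1])
```

If the hands move farther apart or closer together than the grab points, both points cannot be matched exactly without scaling; anchoring the midpoints splits the mismatch evenly between the hands.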

The Sketch systems indicate the potential viability of gestural interfaces for 3D modeling. The multi-stroke gesturing style bears a strong resemblance to conventional sketching, since stylus input is drawn directly on the display surface. However, unlike sketching interfaces, gestural interfaces challenge designers to define gesture sets that are both natural for the user and interpretable by the computer as specific 3D modeling primitives and operations. Through iterative design and testing, Sketch demonstrates that gestural interfaces can be applied to large-scale applications, even those oriented toward precise CAD modeling. Based on this design experience, we presented a range of techniques for improving the precision of gestural interfaces, including structured, task-specific drawing, constraint inferencing and editing, and selective use of non-gestural input. In addition, we identified a number of considerations for making gestural systems scale to real 3D modeling systems, including the use of physical buttons, mnemonic associations, context sensitivity, and bimanual input.

Although this paper focuses on user interface techniques for defining conventional computer 3D model representations, complementary research should focus on making underlying 3D model representations more amenable to sketching interfaces. In particular, we are currently researching model representations that will conveniently support gestural interfaces for sketching free-form 3D models.

Finally, a continuing challenge is to develop techniques for gestural interfaces that ease the transition from unskilled novice to skilled expert. Although marking menus [10] offer an existence proof that elegant transitions are possible, more thorough research is needed to find general results that apply to the multi-stroke geometric gestures of the Sketch system. The current approach to learning Sketch's gestures is to work with an already expert user, in much the same way that artists learn techniques from a master. A more promising alternative might be to integrate menu-based widgets with gestural elements, as suggested by [9].

Special thanks to Joe LaViola for his careful reading of this paper. This work is supported in part by the NSF Graphics and Visualization Center, Advanced Networks and Services, Alias/Wavefront, Autodesk, Microsoft, Sun Microsystems, and TACO.

[1] E.A. Bier. Snap-dragging in three dimensions. Computer Graphics (1990 Symposium on Interactive 3D Graphics), 24(2):193-204, Mar. 1990.

[2] Eric A. Bier, Maureen C. Stone, Ken Pier, William Buxton, and Tony DeRose. Toolglass and Magic Lenses: The see-through interface. In James T. Kajiya, editor, Computer Graphics (SIGGRAPH '93 Proceedings), volume 27, pages 73-80, August 1993.

[3] Mark Bloomenthal, Robert Zeleznik, Russ Fish, Loring Holden, Andrew Forsberg, Rich Riesenfeld, Matt Cutts, Sam Drake, Henry Fuchs, and Elaine Cohen. Sketch-N-Make: Automated machining of CAD sketches. In Proceedings of the 1998 ASME 8th Computers In Engineering Conference, 1998.

[4] V. Branco, A. Costa, and F.N. Ferreira. Sketching 3D models with 2D interaction devices. Eurographics '94 Proceedings, 13(3):489-502, 1994.

[5] D. Brookshire Conner, Scott S. Snibbe, Kenneth P. Herndon, Daniel C. Robbins, Robert C. Zeleznik, and Andries van Dam. Three-dimensional widgets. In David Zeltzer, editor, Computer Graphics (1992 Symposium on Interactive 3D Graphics), volume 25, pages 183-188, March 1992.

[6] Andrew Forsberg, Joseph LaViola Jr., Lee Markosian, and Robert Zeleznik. Seamless interaction in virtual reality. IEEE Computer Graphics and Applications, 17(6), 1997.

[7] Andrew Forsberg, Joseph LaViola Jr., and Robert Zeleznik. ErgoDesk: A framework for two- and three-dimensional interaction at the ActiveDesk. In Proceedings of the 2nd International Immersive Projection Technology Workshop, Ames, Iowa, 1998.

[8] Yves Guiard. Asymmetric division of labor in human skilled bimanual action: The kinematic chain as a model. The Journal of Motor Behavior, 19(4):486-517, 1987.

[9] Gordon Kurtenbach, Thomas P. Moran, and William Buxton. Contextual animation of gestural commands. In Proceedings of Graphics Interface '94, pages 83-90, Banff, Alberta, Canada, May 1994. Canadian Information Processing Society.

[10] Gordon Kurtenbach and William Buxton. User learning and performance with marking menus. In Proceedings of ACM CHI'94, pages 258-264, 1994.

[11] Gordon Kurtenbach, George Fitzmaurice, Thomas Baudel, and William Buxton. The design and evaluation of a GUI paradigm based on tablets, two-hands, and transparency. In Proceedings of ACM CHI'97 Conference on Human Factors in Computing Systems, 1997.

[12] D. Pugh. Designing solid objects using interactive sketch interpretation. Computer Graphics (1992 Symposium on Interactive 3D Graphics), 25(2):117-126, Mar. 1992.

[13] D. Rubine. Specifying gestures by example. Computer Graphics (SIGGRAPH '91 Proceedings), 25(4):329-337, July 1991.

[14] W. Wang and G. Grinstein. A survey of 3D solid reconstruction from 2D projection line drawings. Computer Graphics Forum, 12(2):137-158, June 1993.

[15] Robert C. Zeleznik, Andrew S. Forsberg, and Paul S. Strauss. Two pointer input for 3D interaction. Computer Graphics (1997 Symposium on Interactive 3D Graphics), pages 115-120, April 1997.

[16] Robert C. Zeleznik, Kenneth P. Herndon, and John F. Hughes. SKETCH: An interface for sketching 3D scenes. Computer Graphics (SIGGRAPH '96 Proceedings), pages 163-170, August 1996.